I am a MPhil student at HKUST (Guangzhou)  , supervised by Prof. Ying-Cong Chen.

, supervised by Prof. Ying-Cong Chen.

I am currently conducting research in 3D vision, generative models, and neural rendering. My recent work focuses on 3D/4D asset generation, photometric stereo, and multi-view generation. If you are interested in academic collaboration, please feel free to contact me via email at jlin695@connect.hkust-gz.edu.cn. I am also open to research internship opportunities in related fields!

I obtained my bachelor’s degree from Jinan University (暨南大学), majoring in Electronic Information Science & Technology. During my undergraduate studies, I built a solid foundation in computer vision, computer graphics, and AI-driven 3D modeling.

🔥 News

- 2025.06: 🎉 three papers are accepted by ICCV 2025 🍻

- 2025.03: 🎉 we released the code and demo for kiss3dgen

- 2025.03: 🎉 one paper is accepted by CVPR 2025

📝 Publications

3D Generation/Reconstruction

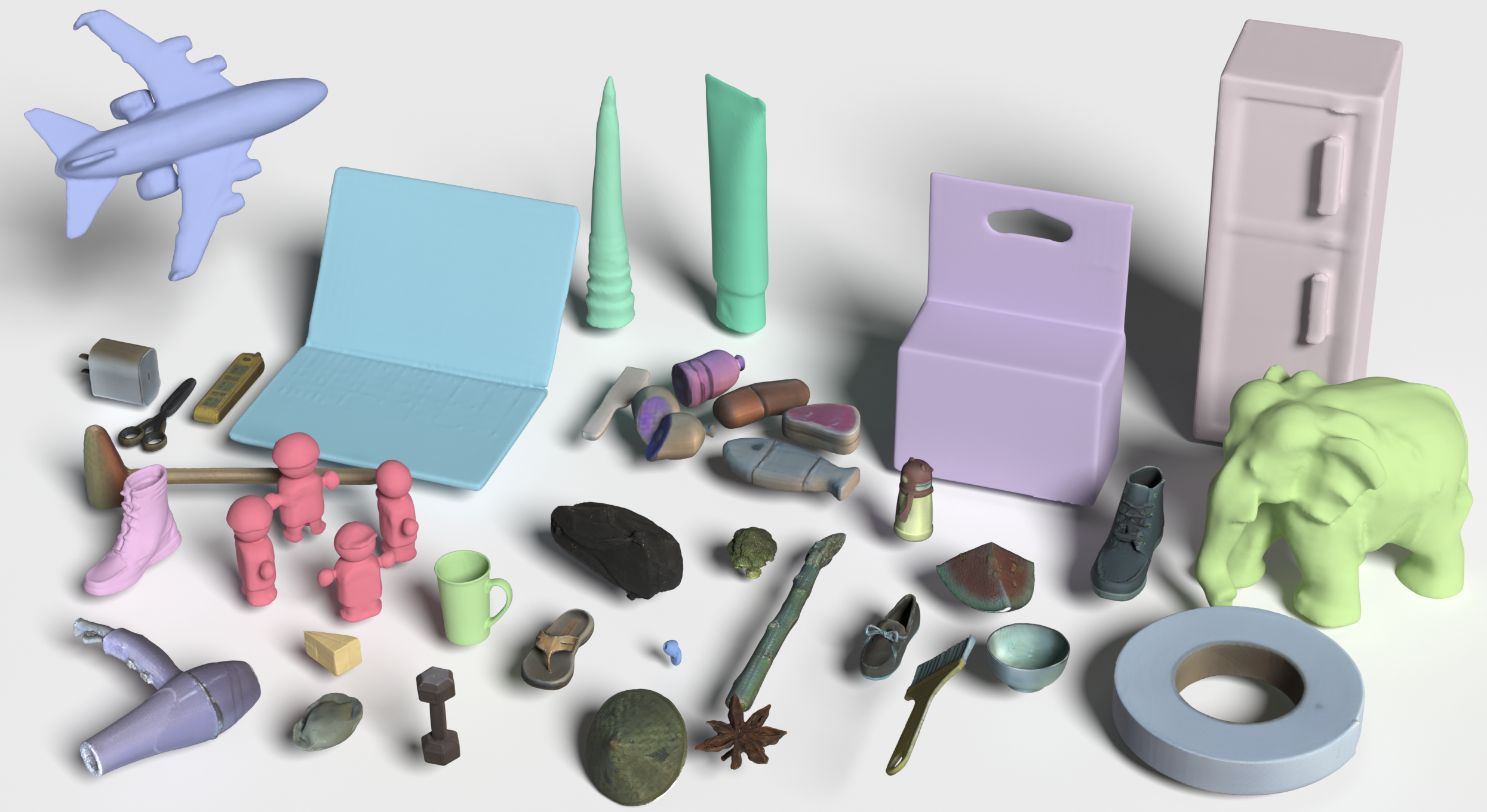

Kiss3DGen: Repurposing Image Diffusion Models for 3D Asset Generation

📄 Paper (arXiv)

Jiantao Lin*, Xin Yang*, Meixi Chen*, Yingjie Xu, Dongyu Yan, Leyi Wu, Xinli Xu, Lie Xu, Shunsi Zhang, Ying-Cong Chen

Project Page | Code | Demo

- This work explores utilizing 2D diffusion models for 3D asset generation.

- Improves quality and diversity in 3D shape synthesis.

DiMeR: Disentangled Mesh Reconstruction Model

📄 Paper (arXiv)

Lutao Jiang*, Jiantao Lin*, Kanghao Chen*, Wenhang Ge*, Xin Yang, Yifan Jiang, Yuanhuiyi Lyu, Xu Zheng, Yinchuan Li, Yingcong Chen

Project Page | Code | Demo

- Proposes DiMeR, a geometry-texture disentangled model with 3D supervision for improved sparse-view mesh reconstruction.

- Enhances reconstruction accuracy and efficiency by separating inputs and simplifying mesh extraction.

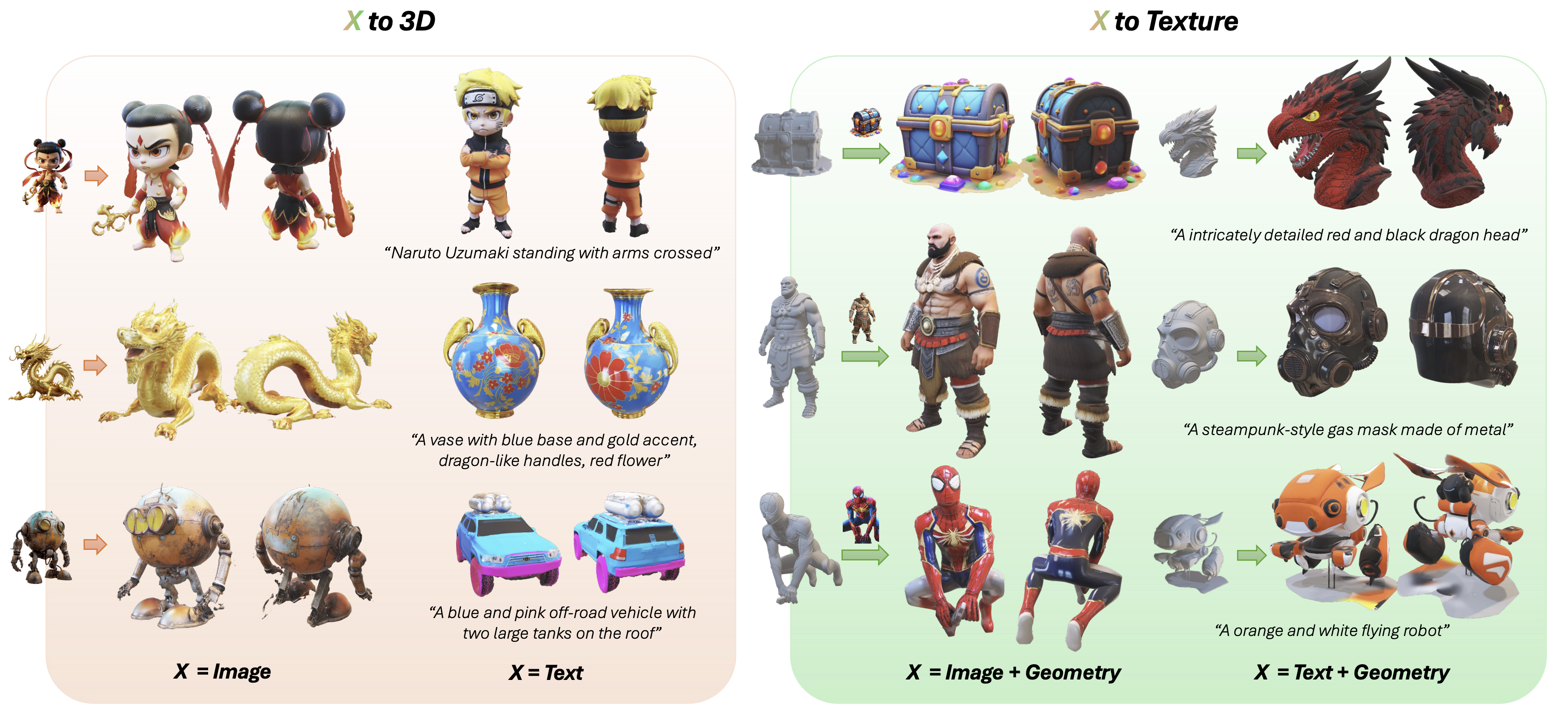

Advancing high-fidelity 3D and Texture Generation with 2.5D latents

📄 Paper (arXiv)

Xin Yang*, Jiantao Lin*, Yingjie Xu, Haodong Li, Yingcong Chen

- Proposes a unified framework for joint 3D geometry and texture generation using versatile 2.5D representations.

- Improves coherence and quality in text- and image-conditioned 3D generation via 2D foundation models and a lightweight 2.5D-to-3D decoder.

FlexPainter: Flexible and Multi-View Consistent Texture Generation

📄 Paper (arXiv)

Dongyu Yan*, Leyi Wu*, Jiantao Lin, Luozhou Wang, Tianshuo Xu, Zhifei Chen, Zhen Yang, Lie Xu, Shunsi Zhang, Yingcong Chen

- Proposes FlexPainter, a multi-modal diffusion-based pipeline for flexible and consistent 3D texture generation.

- Enhances control and coherence by unifying input modalities, synchronizing multi-view generation, and refining textures with 3D-aware models.

(HighLight) PRM: Photometric Stereo-based Large Reconstruction Model

📄 Paper (arXiv)

Wenhang Ge*, Jiantao Lin*, Jiawei Feng, Guibao Shen, Tao Hu, Xinli Xu, Ying-Cong Chen

Project Page | Code | Demo

- A large-scale photometric stereo reconstruction framework.

- Enhances lighting-aware 3D shape recovery with high fidelity.

(HighLight) LucidDreamer: Towards High-Fidelity Text-to-3D Generation via Interval Score Matching

📄 Paper (arXiv)

Yixun Liang*, Xin Yang*, Jiantao Lin, Haodong Li, Xiaogang Xu, Ying-Cong Chen

- Proposes a novel interval score matching approach for text-to-3D generation.

- Significantly improves 3D shape realism and fidelity.

2D Generation

FlexGen: Flexible Multi-View Generation from Text and Image Inputs

📄 Paper (arXiv)

Xinli Xu*, Wenhang Ge*, Jiantao Lin*, Jiawei Feng, Lie Xu, Hanfeng Zhao, Shunsi Zhang, Ying-Cong Chen

- A multi-view generation model that fuses text and image inputs.

- Enables controllable and high-quality multi-view synthesis.

SG-Adapter: Enhancing Text-to-Image Generation with Scene Graph Guidance

📄 Paper (arXiv)

Guibao Shen*, Luozhou Wang*, Jiantao Lin, Wenhang Ge, Chaozhe Zhang, Xin Tao, Yuan Zhang, Pengfei Wan, Zhongyuan Wang, Guangyong Chen, Yijun Li, Ying-Cong Chen.

- Introduces scene graph constraints into text-to-image generation.

- Improves structure and semantic consistency in generated images.

2D Recognition

Graph Representation and Prototype Learning for Webly Supervised Fine-Grained Image Recognition

📄 Paper (Pattern Recognition Letters)

Jiantao Lin, Tianshui Chen, Ying-Cong Chen, Zhijing Yang, Yuefang Gao

- Proposes a novel graph-based method for fine-grained image recognition under weak supervision.

- Learns better category structures for challenging datasets.

Learning Consistent Global-Local Representation for Cross-Domain Facial Expression Recognition

📄 Paper (ICPR 2022)

Yuhao Xie, Yuefang Gao, Jiantao Lin, Tianshui Chen

- Introduces a domain-adaptive method for cross-domain facial expression recognition.

- Bridges the gap between different facial datasets through global-local feature alignment.

🎖 Honors and Awards

- 2022.10 First place in “Changlu Cup” Student Science and Technology Innovation Competition (Jinan University and Zhuhai Longtec Corp., Ltd)

- 2022.04 Meritorious Winner of Mathematical Contest in Modeling (The Consortium for Mathematics and Its Application)

- 2022.03 Outstanding Student Scholarship (Jinan University)

- 2021.12 Second Prize of Guangdong Province, National Student Mathematical Modeling Competition (Chinese Society of Industrial and Applied Mathematics)

- 2021.11 National University Students Electrical Math Modeling Competition (Chinese Society for Electrical Engineering, Electrical Math Committee of CSEE)

- 2021.10 Outstanding Student Scholarship (Jinan University)

📖 Educations

- 2023.09 - Present, MPhil, The Hong Kong University of Science and Technology (Guangzhou), China.

- 2019.09 - 2023.06, Undergraduate, Jinan University, China.